Issue 87

Perfect lunar webpage

Hello, dear readers! 👋

In this issue, among other things:

Announcement of the new OpenAI text-to-video model

What is the "tenth man rule" and how to apply it

A 60-page report on trends in font design

How to use AI for 3D rendering

How to design Drag and Drop mechanics correctly

3 secrets for a harmonious combination of fonts

How search engine optimization standards deprive websites of their individuality

…and much more!

Enjoy reading!

🗞 News and articles

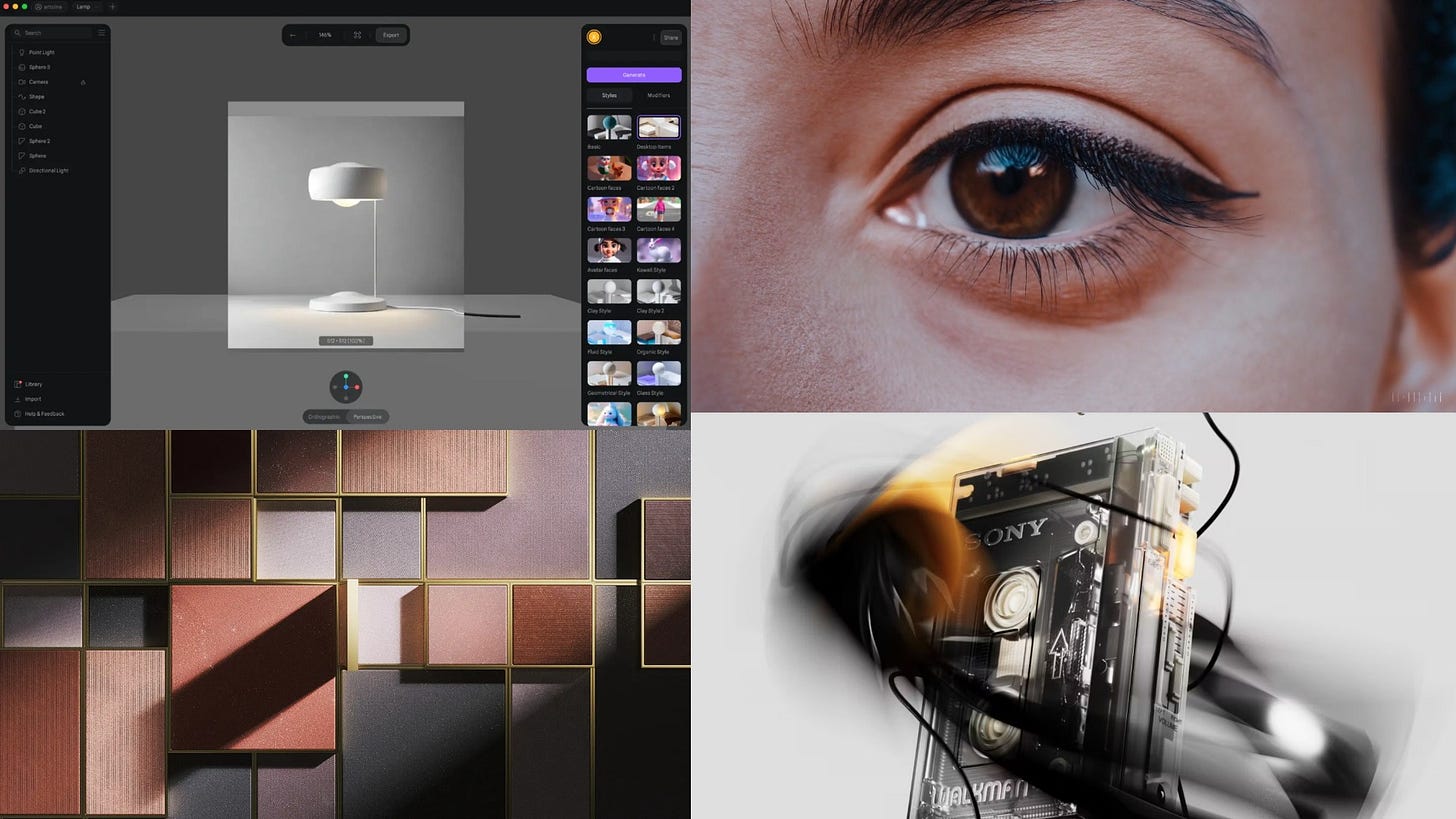

Antoine Vidal described tools that let you use AI for 3D rendering. In this pipeline, you build basic geometry in a 3D editor, and the AI uses it to generate an image with lighting and textures already applied, in real time. This makes it possible to produce concepts and test ideas very quickly, which significantly speeds up the production of 3D graphics.

In the article, he examines the combination of ControlNet, Krea and Blender, as well as Spline, Runway and other tools.

Monotype's annual 60-page PDF report on trends in font design. Among other things, it notes that nostalgia for the 90s, technological fonts, deconstructionism, and dynamic forms are still relevant.

The Tenth Man Rule: How to Take Devil’s Advocacy to a New Level

Analyst Chris Meyer wrote about the "tenth man rule", also known as the "devil's advocate" principle: a counterintuitive approach to collective decision-making. According to this principle, if 9 out of 10 people share one point of view, the tenth should take the opposite one. This helps the group find an unexpected solution or identify a potential problem.

Chris gives examples of how this method is used in military leadership and in the church, and explains how to apply it in your own practice and what its limitations are.

Drag-and-Drop UX: Guidelines and Best Practices

A detailed article about designing drag-and-drop in interfaces. Among other things, the author covers which visual components are involved in dragging and which nuances of their behavior and appearance need to be taken into account. The article includes many visual examples.

Key points from the article:

Drag and drop (or dragging) is an interaction with interface elements that consists of three operations: grab (drag), move, and release (drop)

Dragging is usually used to move an object or change its size and shape. A classic example: resizing a program window or moving it

Unlike in the real world, in a digital environment it is not always clear what can be moved and where, how to grab an element, and what happens after it is moved

If the object cannot be dragged by its entire surface, it must have a "grab handle": a dedicated area marked with an icon. There is currently no single visual standard for this element.

While an object is being moved, you can show a simplified placeholder instead of the full object

If the object can be dragged, it can be highlighted when the cursor hovers over it, inviting the user to take action

If an element can be both clicked to trigger an action and dragged, it is important to choose the right press duration that starts a drag

While being dragged, the object should be visually distinct from the rest

When sorting, it is important to determine exactly when the lower elements will move apart so that the dragged object can take their place. This usually happens when the edge of the dragged object intersects with the center of the static one

If the drop zone is off-screen, the page should auto-scroll to follow the dragged object

If the object is over an area where dropping is not allowed, this can be communicated through the cursor state

Drag and drop should be used where it simplifies rather than complicates the work. It is also necessary to leave the option to cancel the action via Esc
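Several of the points above (press duration, the sorting threshold, and auto-scroll) can be sketched as small pure functions. This is only an illustrative TypeScript sketch, not code from the article: every function name, the `Rect` shape, and all numeric thresholds are assumptions chosen for the example.

```typescript
// Illustrative sketch only: names and threshold values are assumptions,
// not taken from the article.

interface Rect {
  top: number;
  bottom: number;
}

const LONG_PRESS_MS = 200;     // assumed press duration that turns a click into a drag
const EDGE_ZONE_PX = 40;       // assumed distance from a viewport edge where auto-scroll starts
const MAX_SCROLL_STEP_PX = 20; // assumed maximum scroll step per frame

/** A press is treated as a drag once it has been held long enough. */
function isDragPress(pressDurationMs: number): boolean {
  return pressDurationMs >= LONG_PRESS_MS;
}

/**
 * Index at which the dragged item would land in a vertical list.
 * A static item gives way once the leading edge of the dragged item
 * passes that item's center. `staticItems` excludes the dragged item
 * and is ordered top to bottom.
 */
function insertionIndex(dragged: Rect, staticItems: Rect[], movingDown: boolean): number {
  const leadingEdge = movingDown ? dragged.bottom : dragged.top;
  let index = 0;
  for (const item of staticItems) {
    const center = (item.top + item.bottom) / 2;
    if (leadingEdge > center) index += 1;
  }
  return index;
}

/**
 * Scroll step for the current frame: negative near the top edge,
 * positive near the bottom edge, zero elsewhere. The step grows as
 * the pointer gets closer to the edge.
 */
function autoScrollStep(pointerY: number, viewportHeight: number): number {
  if (pointerY < EDGE_ZONE_PX) {
    const depth = (EDGE_ZONE_PX - pointerY) / EDGE_ZONE_PX;
    return -Math.min(1, depth) * MAX_SCROLL_STEP_PX;
  }
  const distanceToBottom = viewportHeight - pointerY;
  if (distanceToBottom < EDGE_ZONE_PX) {
    const depth = (EDGE_ZONE_PX - distanceToBottom) / EDGE_ZONE_PX;
    return Math.min(1, depth) * MAX_SCROLL_STEP_PX;
  }
  return 0;
}
```

In a real interface these helpers would be called from pointer event handlers, with an Esc key listener that restores the original order to cancel the drag. The right thresholds depend on the product and input device, so the constants here should be treated as starting points to tune.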

⚡️ Briefly

3 secrets that will help to achieve a harmonious combination of fonts.

New technologies

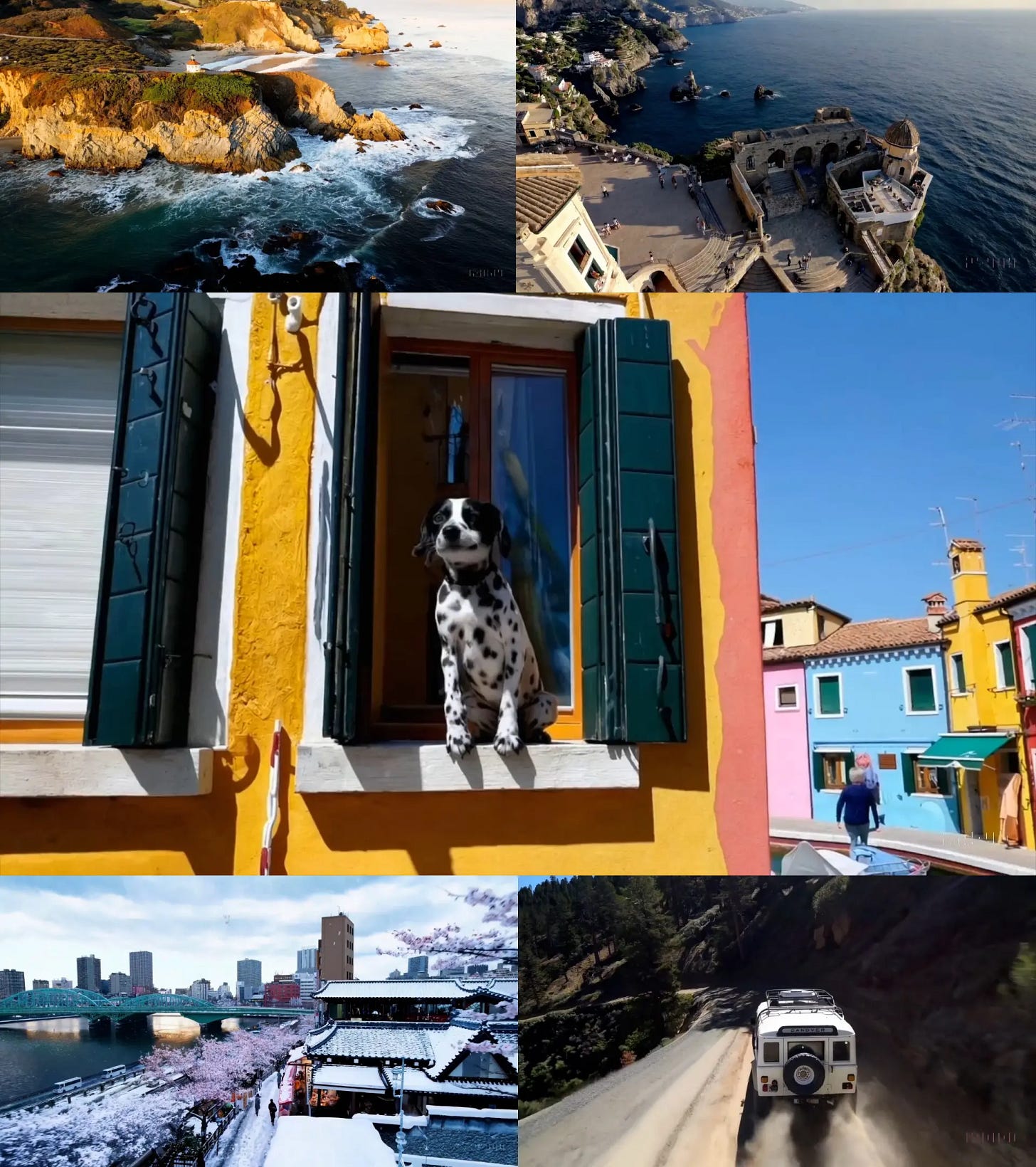

OpenAI has announced Sora, its model for generating videos from text prompts. So far, it is available only to a select few, and there is no date yet for open access.

But the promo page contains several dozen examples of generated video, and they look amazing. In places, they are difficult to distinguish from real footage. It looks like a real technological breakthrough. The company says that all the examples are unedited output of the model, without additional manipulation.

The model can generate videos up to 60 seconds long in 1080p quality. Judging by the examples, it "understands" very well what is happening in the frame. The actions of the characters and objects look physically plausible and realistic. The model also "remembers" all the frames, so if an object leaves the frame, it can return without distortion.